If you run continuous deployment today, you need high-performance testing. That is the key takeaway shared by our guest presenter, Priyanka Halder: test speed matters.

Priyanka Halder presented her approach to achieving success in a hyper-growth company in her webinar for Applitools in January 2020. The title of her talk sums up her experience at GoodRx:

“High-Performance Testing: Acing Automation In Hyper-Growth Environments.”

Hyper-growth environments focus on speed and agility. Priyanka focuses on the approach that lets GoodRx not only develop but also test features and releases while growing at an exponential rate.

About Priyanka

Priyanka Halder is head of quality at GoodRx, a startup focused on finding all the providers of a given medication for a patient – including non-brand substitutes – and helping over 10 million Americans find the best prices for those medications. Priyanka joined in 2018 as head of quality engineering – with a staff of just one quality engineer. She has since grown the team 1200% and grown her team’s capabilities to deliver test speed, test coverage, and product reliability. As she explains, past experience drives current success.

Priyanka’s career includes over a dozen years of test experience at companies ranging from startups to billion-dollar companies. She has extensive QA experience in managing large teams and deploying innovative technologies and processes, such as visual validation, test stabilization pipelines, and CI/CD. Priyanka also speaks regularly at testing and technology conferences. She accepted invitations to give variations of this particular talk eight times in 2019.

One interesting note: she says she would like to prove to the world that 100% bug-free software does not exist.

Start With The Right Foundation

Priyanka, as a mother, knows the value of stories. She sees the story of the Three Little Pigs as instructive for anyone trying to build a successful test solution in a hyper-growth environment. Everyone knows the story: three pigs each build their own home to protect themselves from a wolf. The first little pig builds a straw house in a couple of hours. The second little pig builds a home from wood in a day. The third little pig builds a solid infrastructure of brick and mortar, which takes a number of days. When the wolf comes to eat the pigs, he can blow down the straw house and the wood house, but the solid house saves the pigs inside.

Priyanka shares from her own experience: she encounters many wolves in a hyper-growth environment. The only safeguard comes from building a strong foundation. Priyanka describes a hyper-growth environment and how high-performance testing works. She describes the technology and team needed for high-performance testing. And she describes what she delivered (and continues to deliver) at GoodRx.

Define High-Performance Testing

So, what is high-performance testing?

Fundamentally, high-performance testing maximizes quality in a hyper-growth startup. To succeed, she says, you must embrace the ever-changing startup mentality, be one step ahead, and constantly provide high-quality output without being burned out.

Agile startups share many common characteristics:

- Chaotic – you need to be comfortable with change

- Less time – all hands on deck all the time for all the issues

- Fewer resources – you have to build a team where veterans are mentors and not enemies

- Market pressure – teams need to understand and assess risk

- Reward – do it right and get some clear benefits and perks

If you do it right, it can lead to satisfaction. If you do it wrong, it leads to burnout. So – how do you do it right?

Why High-Performance Testing?

Leveraging data collected by another company, Priyanka showed how the technology for app businesses changed drastically over the past decade. These differences include:

- Scope – instead of running a dedicated app, or on a single browser, today’s apps run on multiple platforms (web app and mobile)

- Frequency – we release apps on demand (not annually, quarterly, monthly or daily)

- Process – we have gone from waterfall to continuous delivery

- Framework – we used to use single-stack, on-premise software – today we use open source, best-of-breed, cloud-based solutions for developing and delivering.

The assumptions of “test last” that may have worked a decade back can’t work anymore. So, we need a new paradigm.

How To Achieve High-Performance Testing

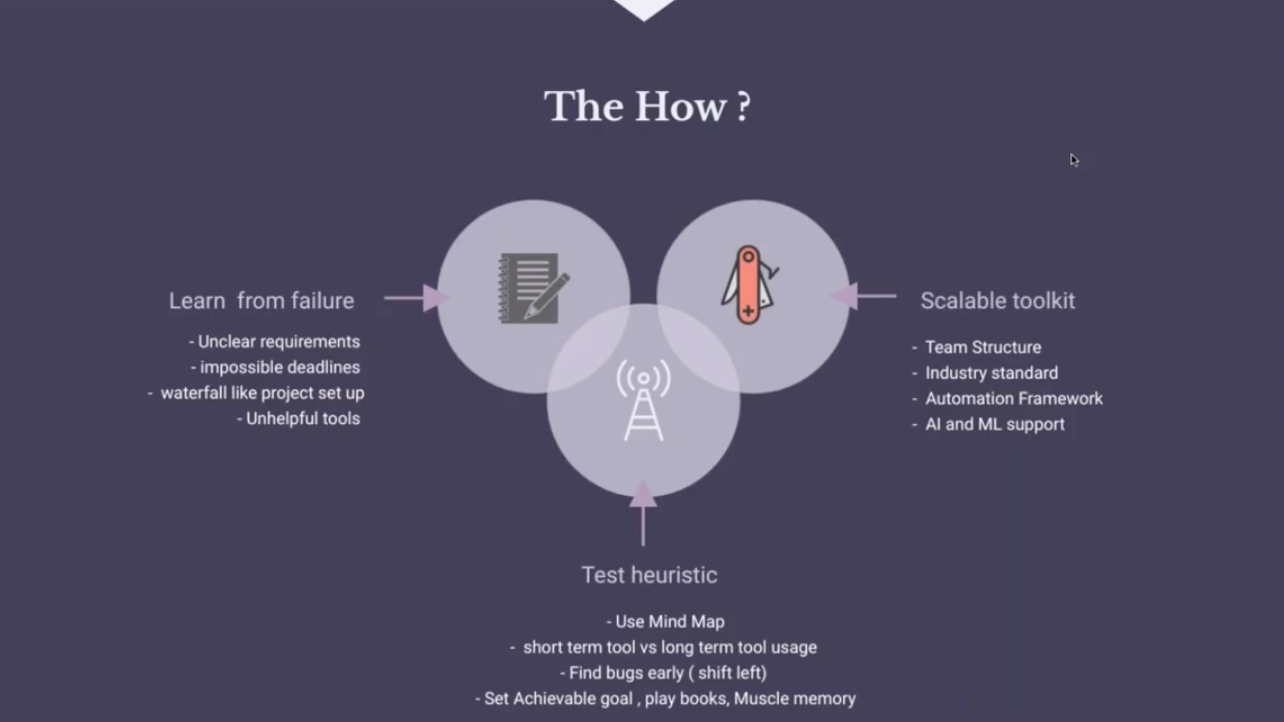

Priyanka talked about her own experience. Among other things, teams need to know that they will fail early as they try to meet the demands of a hyper-growth environment. Her approach, based on her own experiences, is to ask questions:

- Does the team appreciate that failures can happen?

- Does the team have inconsistencies? Do they have unclear requirements? Set impossible deadlines? Use waterfall while claiming to be agile? Note those down.

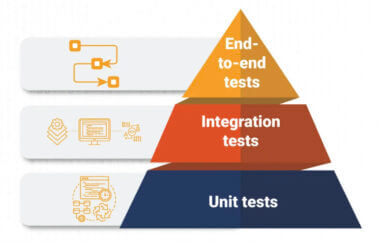

Once you know the existing situation, you can start to resolve contradictions and issues. For example, you can use a mind map to visualize the situation. You can divide issues and focus on short term work (feature team for testing) vs. long term work (framework team). Another important goal – figure out how to find bugs early (aka Shift Left). Understand which tools are in place and which you might need. Know where you stand today vis-a-vis industry standards for release throughput and quality. Lastly, know the strength of your team today for building an automation framework, and get AI and ML support to gain efficiencies.

Building a Team

Next, Priyanka spoke about what you need to build a team for high-performance testing.

In the past, we used to have a service team. They were the QA team and had their own identity. Today, we have true agile teams, with integrated pods where quality engineers are the resource for their group and integrate into the entire development and delivery process.

So, in part you need skills. You need engineers who know test approaches that can help their team create high-quality products. Some need to be familiar with behavior-driven development or test-driven development. Some need to know the automation tools you have chosen to use. And some need to be thinking about design-for-testability.

One huge part of test automation involves the framework. You need a skill set familiar with building code that self-identifies element locators, builds hooks for automation controls, and ensures consistency between builds for automation repeatability.

Beyond skills, you need individuals with confidence and flexibility. They need to meld well with the other teams. In a truly agile group, team members distribute themselves through the product teams as test resources. While they may connect to the main quality engineering team, they still must be able to function as part of their own pod.

Test Automation

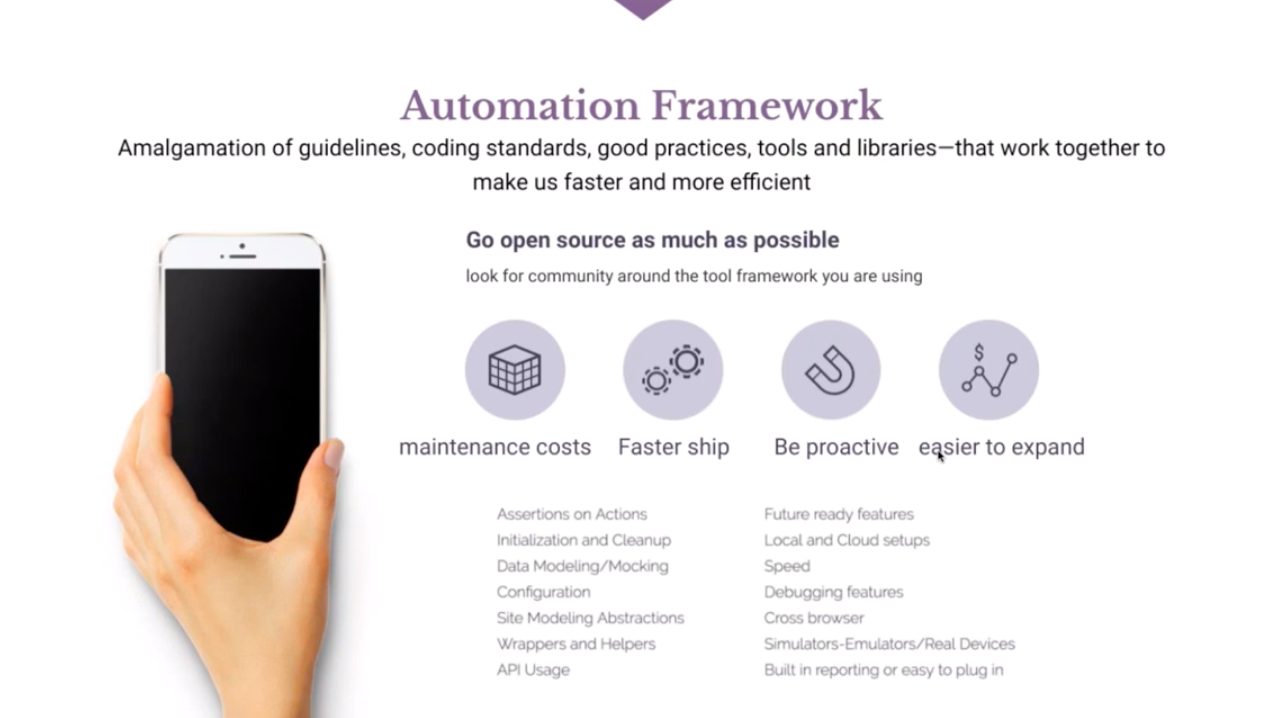

Priyanka asserts that good automation makes high-performance testing possible.

In days gone by, you might have bought tools from a single vendor. Today, open source solutions provide a rich source for automation solutions. Open source generally has lower maintenance costs, generally lets you ship faster, and expands more easily.

Open source tools come with communities of users who document best practices for using those tools. You might even learn best-practice processes for integrating with other tools. The communities give you valuable lessons so you can learn without having to fail (or learn from the failures of others).

Priyanka describes aspects of software deployment processes that you can automate. Among the features and capabilities you can automate:

- Assertions on Action

- Initialization and Cleanup

- Data Modeling/Mocking

- Configuration

- Safe Modeling Abstractions

- Wrappers and Helpers

- API Usage

- Future-ready Features

- Local and Cloud Setups

- Speed

- Debugging Features

- Cross Browser

- Simulators/Emulators/Real Devices

- Built-in reporting or easy to plug in

Industry Standards

You can measure all sorts of values from testing. Quality, of course. But what else? What are the standards these days? Who knows what are typical test times for test automation?

Priyanka shares data from Sauce Labs about standards. Sauce surveyed a number of companies and discussed benchmark settings for four categories: test quality, test run time, test platform coverage, and test concurrency. The technical leaders at these companies set some benchmarks they thought aligned with best-in-class industry standards.

In detail:

- Quality – pass at least 90% of all tests run

- Run Time – all tests run in two minutes or less on average

- Platform Coverage – tests cover five critical platforms on average

- Concurrency – at peak usage, tests utilize at least 75% of available capacity
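The four benchmarks above can be expressed as simple threshold checks. The following is a minimal sketch, not something shown in the webinar: the `SuiteStats` fields and function name are hypothetical, and treating platform coverage as "at least five platforms" is an assumption about how the benchmark is meant to be read.

```python
from dataclasses import dataclass

# Thresholds taken from the four benchmarks listed above.
MIN_PASS_RATE = 0.90
MAX_AVG_RUN_SECONDS = 120.0
MIN_PLATFORMS = 5          # assumption: "five platforms" read as a minimum
MIN_PEAK_UTILIZATION = 0.75


@dataclass
class SuiteStats:
    """Hypothetical summary of one team's test runs (field names are illustrative)."""
    tests_run: int
    tests_passed: int
    total_run_seconds: float
    platforms_covered: int
    peak_concurrent_tests: int
    available_concurrency: int


def benchmark_report(stats: SuiteStats) -> dict[str, bool]:
    """Return, per benchmark, whether the suite meets the threshold."""
    pass_rate = stats.tests_passed / stats.tests_run
    avg_run_seconds = stats.total_run_seconds / stats.tests_run
    utilization = stats.peak_concurrent_tests / stats.available_concurrency
    return {
        "quality": pass_rate >= MIN_PASS_RATE,
        "run_time": avg_run_seconds <= MAX_AVG_RUN_SECONDS,
        "platform_coverage": stats.platforms_covered >= MIN_PLATFORMS,
        "concurrency": utilization >= MIN_PEAK_UTILIZATION,
    }
```

A team could feed its own numbers into `benchmark_report` and check `all(report.values())` to see whether it meets all four marks at once.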

Next, Priyanka shared the data Sauce collected from the same companies about how they fared against the average benchmarks discussed.

- Quality – 18% of the companies achieved 90% pass rate

- Run time – 36% achieved the 2 minute or less average

- Platform coverage – 63% reached the five platform coverage

- Concurrency – 71% achieved the 75% utilization mark

- However, only 6.2% of the companies achieved the mark on all four.

Test speed became a noticeable issue. While 36% ran on average in two minutes or faster, a large number of companies exceeded five minutes – more than double.

Investigating Benchmarks

These benchmarks are fascinating – especially run time – because test speed is key to faster overall delivery. The longer you have to wait for testing to finish, the slower your dev release cycle times.

Sadly, lots of companies think they’re acing automation, but so few are meeting key benchmarks. Just having automation doesn’t help. It’s important to use automation that helps meet these key benchmarks.

Another area worth investigating involves platform coverage. While Chrome remains everyone’s favorite browser, not everyone is on Chrome. Perhaps 2/3 of users run Chrome, but Firefox, Safari, Edge and others still command attention. More importantly, lots of companies want to run mobile, but only 8.1% of company tests run on mobile. Almost 92% of companies run desktop tests and then resize their windows for the mobile device. Of the mobile tests, only 8.9% run iOS native apps and 13.2% run Android native apps. There’s a gap at a lot of companies.

GoodRx Strategies

Priyanka dove into the capabilities that allow GoodRx to solve the high-performance testing issues.

Test In Production

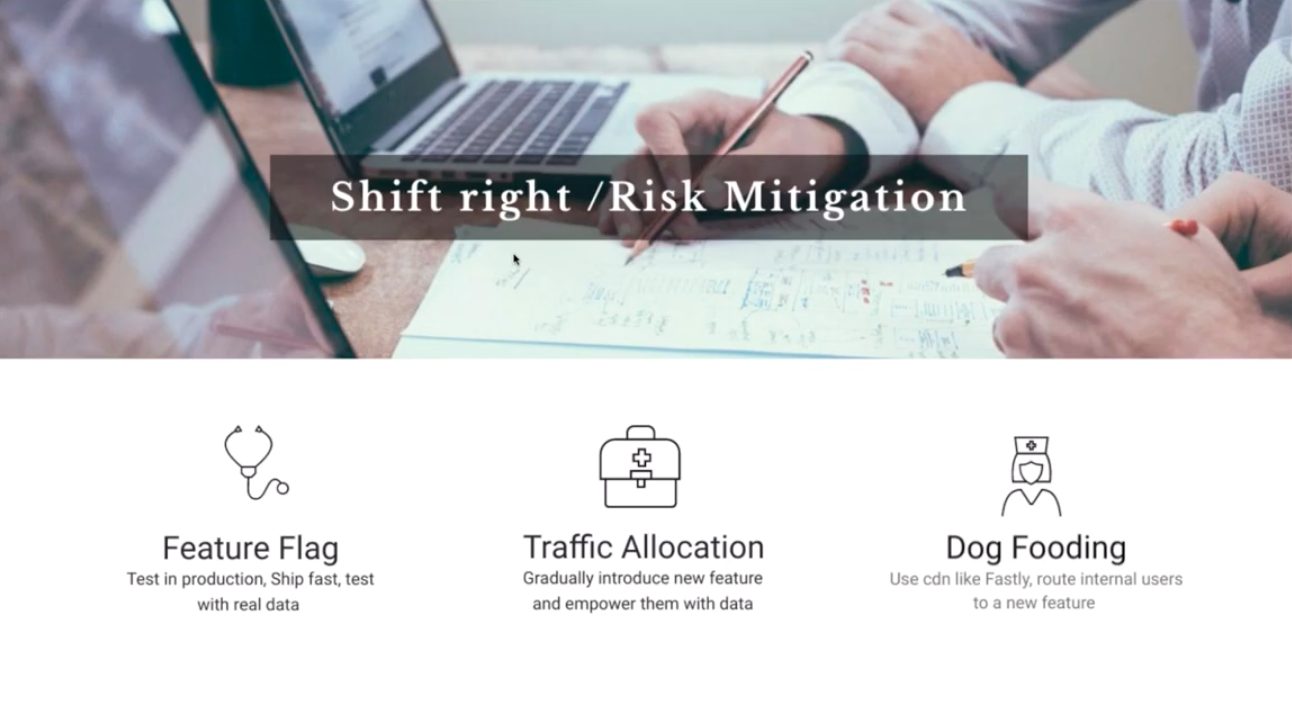

The first capability: GoodRx uses a Shift Right approach that moves testing into the realm of production.

Production testing? Yup – but it’s not spray-and-pray. GoodRx’s approach includes the following:

- Feature Flag – Test in production. Ship fast, test with real data.

- Traffic Allocation – gradually introduce new features and empower targeted users with data. Hugely important for finding corner cases without impacting the entire customer base.

- Dogfooding – use a CDN like Fastly to deploy and route internal users to new features.

The net result: this reduces overhead, lets the app get tested with real data sets, and identifies issues without impacting the entire customer base. So, the big release becomes a set of small releases on a common code base, tested by different people to ensure that the bulk of your customer base doesn’t get a rude awakening.
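To make feature flags and traffic allocation concrete, here is a minimal sketch of a percentage-based rollout. It is an illustration only: the function names, the flag name, and the hashing scheme are assumptions, and it makes the decision in application code, whereas the talk describes routing internal users at the CDN level.

```python
import hashlib


def bucket(user_id: str, flag_name: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag_name: str, user_id: str, rollout_percent: int,
               internal_users: frozenset[str] = frozenset()) -> bool:
    """Decide whether a user sees the flagged feature.

    Internal users always get the feature (dogfooding). Everyone else
    gets it only if their bucket falls below the rollout percentage.
    """
    if user_id in internal_users:
        return True
    return bucket(user_id, flag_name) < rollout_percent
```

Because the bucket depends only on the flag name and user ID, a user who sees the feature at 10% rollout keeps seeing it as the percentage rises, which keeps the gradual release consistent for each user.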

AI/ML

Next, Priyanka talked about how GoodRx uses AI/ML tools to augment her team. These tools make her team more productive, allowing her to meet the quality needs of the high-performance environment.

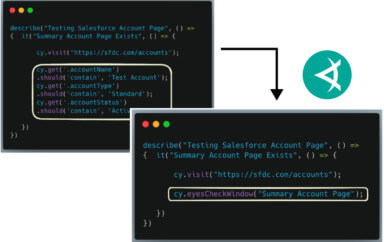

First, Priyanka discussed automated visual regression – using AI/ML to automate the validation of rendered pages. Here, she talked about using Applitools – as she says, the acknowledged leader in the field. Priyanka talked about how GoodRx uses Applitools.

At GoodRx, there may be one page used for a transaction. But, GoodRx supports hundreds of drugs in detail, and a user can dive into those pages that describe the indications and cautions about individual medications. To ensure that those pages remain consistent, GoodRx validates these pages using Applitools. Trying to validate these pages manually would take six hours. Applitools validates these pages in minutes and allows GoodRx to release multiple times a day.

To show this, Priyanka used an example of visual differences. She showed a kids’ cartoon with visual differences. Then she showed what happens if you do a normal image comparison, that is, a pixel-based comparison.

A bit-wise comparison will fail too frequently. Using the Applitools AI system, they can set up Applitools to look at the images that have already been approved and quickly validate the pages being tested.
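A small sketch can show why a strict pixel comparison fails so often. This is not how Applitools compares images; it only illustrates the brittleness of exact matching, using tiny grayscale images represented as nested lists (an assumption made to keep the example self-contained).

```python
Image = list[list[int]]  # rows of grayscale pixel values, 0-255


def mismatch_ratio(baseline: Image, candidate: Image, tolerance: int = 0) -> float:
    """Fraction of pixels whose values differ by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(b_row) != len(c_row) for b_row, c_row in zip(baseline, candidate)
    ):
        raise ValueError("images must have the same dimensions")
    total = sum(len(row) for row in baseline)
    if total == 0:
        return 0.0
    differing = sum(
        1
        for b_row, c_row in zip(baseline, candidate)
        for b, c in zip(b_row, c_row)
        if abs(b - c) > tolerance
    )
    return differing / total


baseline = [[200, 200, 200], [200, 50, 200], [200, 200, 200]]
# The same picture rendered one brightness level darker, as a different
# rendering or smoothing setting might produce.
rerendered = [[value - 1 for value in row] for row in baseline]

strict = mismatch_ratio(baseline, rerendered)                 # exact comparison
lenient = mismatch_ratio(baseline, rerendered, tolerance=2)   # small tolerance
```

Here the exact comparison reports every pixel as different even though a person would see the same image, while a small tolerance reports none. A fixed tolerance is still a crude fix, which is part of the case for a smarter comparison.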

Applitools completes a full visual regression of 350 test cases, comprising 2,500 checks, in less than 12 minutes. Manually, it takes six hours.

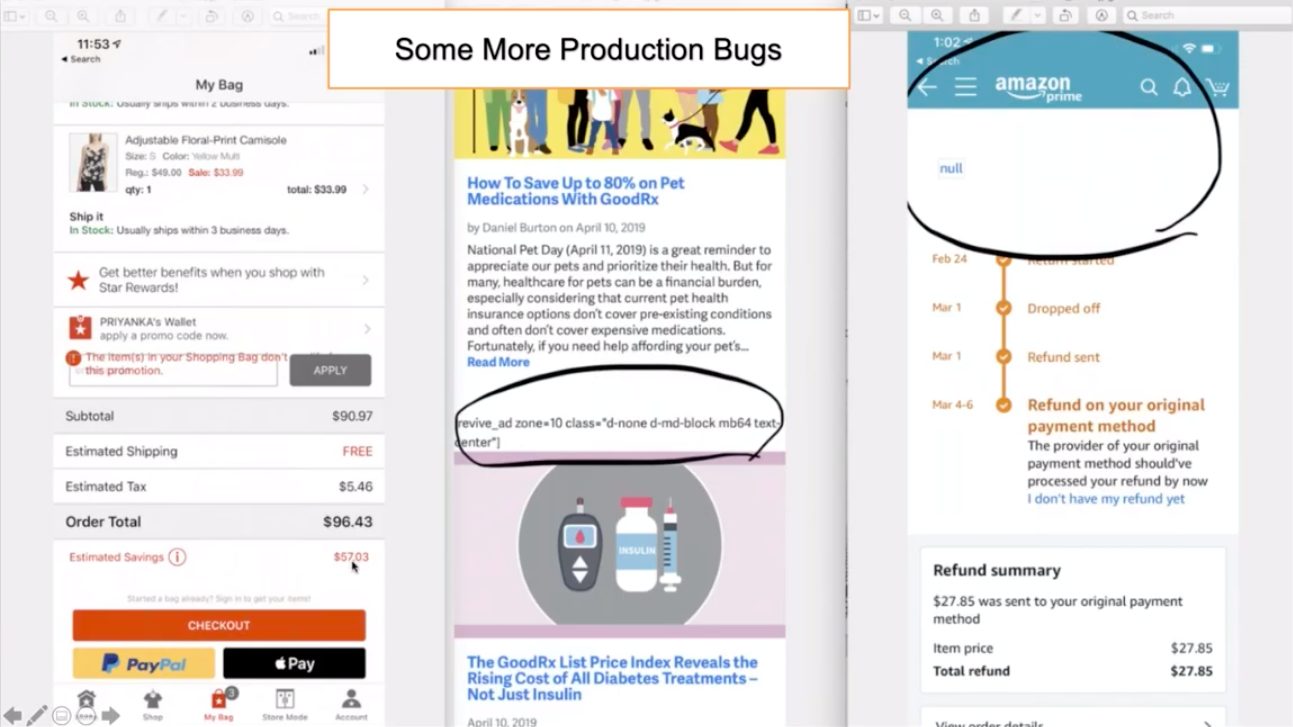

Priyanka showed the kinds of real-world bugs that Applitools uncovered: one in a screenshot from her own site, GoodRx, a second from amazon.com, and a third from macys.com. She showed examples with corrupted displays, including ones that Selenium alone could not catch.

ReportPortal.io

Next, Priyanka moved on to ReportPortal.io. As she says, when you ace automation, you need to know where you stand. You need to build trust around your automation platform by showing how it is behaving. ReportPortal.io brings together all your data: test times, bugs discovered, etc. It shows how tests are running at different times of the day. Another display shows the flakiest tests and the longest-running tests to help the team release seamlessly and improve their statistics.

Any failed test case in reportportal.io can link the test results log directly into the reportportal.io user interface.
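As a rough illustration of what "flakiest" and "longest-running" could mean, the sketch below ranks tests from a run history. It is not ReportPortal's algorithm or API: the `Run` record and the flip-rate metric are assumptions chosen for the example.

```python
from collections import defaultdict
from typing import NamedTuple


class Run(NamedTuple):
    test: str
    passed: bool
    seconds: float


def flip_rate(outcomes: list[bool]) -> float:
    """Share of consecutive run pairs where the outcome changed."""
    if len(outcomes) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(outcomes, outcomes[1:]) if prev != cur)
    return flips / (len(outcomes) - 1)


def summarize(runs: list[Run], top: int = 3) -> tuple[list[str], list[str]]:
    """Return (flakiest test names, slowest test names), most severe first."""
    outcomes: dict[str, list[bool]] = defaultdict(list)
    durations: dict[str, list[float]] = defaultdict(list)
    for run in runs:  # runs are assumed to be in chronological order
        outcomes[run.test].append(run.passed)
        durations[run.test].append(run.seconds)
    # Only tests whose outcome actually changed count as flaky.
    flaky = [name for name in outcomes if flip_rate(outcomes[name]) > 0]
    flakiest = sorted(flaky, key=lambda name: flip_rate(outcomes[name]), reverse=True)
    slowest = sorted(
        durations,
        key=lambda name: sum(durations[name]) / len(durations[name]),
        reverse=True,
    )
    return flakiest[:top], slowest[:top]
```

A test that fails every time has a flip rate of zero under this metric, so it shows up as broken rather than flaky, which is usually the distinction a team wants.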

GoodRx uses behavior-driven development (BDD), and their BDD approach lets them describe the behavior they want for a given feature, including how it should behave in good and bad cases, and ensure that those cases get covered.

High-Performance Testing – The Burn Out

Priyanka made it clear that high-performance environments take a toll on people. Everywhere.

She showed a slide referencing a blog by Atlassian talking about work burnout symptoms – and prevention. From her perspective, the symptoms of workplace stress include:

- Being cynical or critical at work

- Dragging yourself to work and having trouble getting started

- Being irritable or impatient, lacking energy, finding it hard to concentrate, having headaches

- Lack of satisfaction from achievement

- Using food, drugs or alcohol to feel better or simply not to feel

So, what should a good team lead do when she notices signs of burnout? Remind people to take steps to prevent burnout. These include:

- Avoid unachievable deadlines. Don’t take on too much work. Estimate, add buffer, add resource.

- Do what gives you energy – avoid what drains you

- Manage digital distraction – the grass will always be greener on the other side

- Do something outside your work – Engage in activities that bring you joy

- Say no to too many projects – gauge your bandwidth and communicate

- Make self-care a priority – meditation/yoga/massage

- Have a strong support system – talk to your family, friends, seek help

- Unplugging for short periods helps immensely

The point here is that hyper-growth environments can take a toll on everyone – employees, managers. Unrealistic demands can permeate the organization. Use care to make sure that this doesn’t happen to you or your team.

GoodRx Case Study

Why not look at Priyanka’s direct experience at GoodRx? Her employer, GoodRx, provides price transparency for drugs. GoodRx lets individuals search for drugs they might need or use for various conditions. Once an individual selects a drug, GoodRx lets the individual see the prices for that drug in various locations to find the best price for that drug.

The main customers are people who don’t have insurance or have high-deductible insurance. In some cases, GoodRx offers coupons to keep the prices low. GoodRx also provides GoodRx Care, a telemedicine consultation system, to help answer patient questions about drugs. As an alternative to seeing a doctor, a GoodRx Care consultation costs anywhere between $5 and $20.

Because the GoodRx web application provides high value for its customers, often with high demand, the app must maintain proper function, high performance, and high availability.

Set Goals

The QA goals Priyanka designed needed to meet the demands of this application. Her goals included:

- A distributed QA team providing 24/7 QA support

- A dedicated SDET team that specializes in test

- A robust framework that will make any POC super simple (plug and play)

- Test stabilization pipeline using Travis

- 100% automation support to reduce regression time by 90%

Build a Team

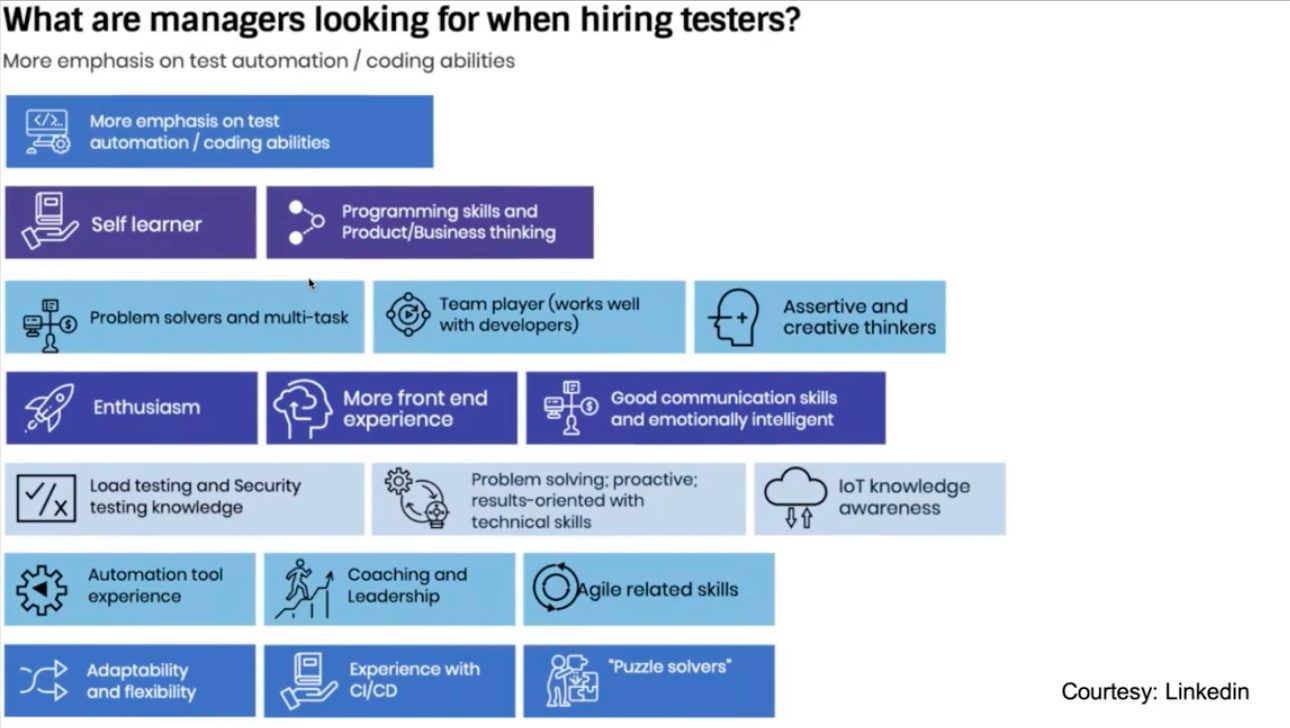

As a result, Priyanka needed to hire a team that could address these goals. She showed the profile she developed on LinkedIn to find people that met her criteria: dev-literate, test-literate engineers who could work together as a team and function successfully. Test automation and coding abilities rose to the top of her criteria.

Build a Tech Stack

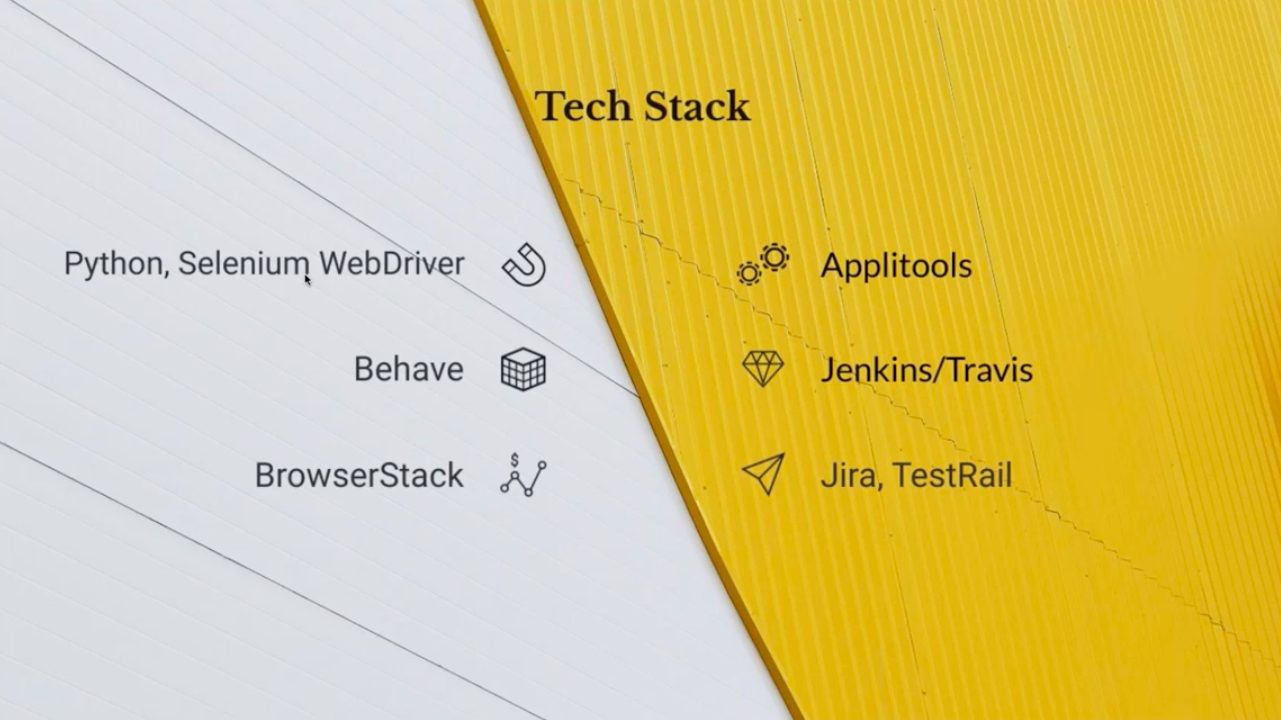

Next, Priyanka and her team invested in a tech stack:

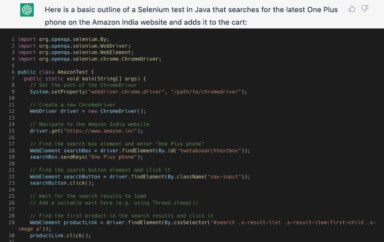

- Python and Selenium WebDriver

- Behave for BDD

- Browserstack for a cloud runner

- Applitools for visual regression

- Jenkins/Travis and Google Drone for CI

- Jira, TestRail for documentation

The CI/CD success criteria came down to four requirements:

- Speed and parallelization

- BDD for easy debugging and readability

- Cross-browser, cross-device coverage in CI/CD

- Visual validation

Set QA expectations for CI/CD testing

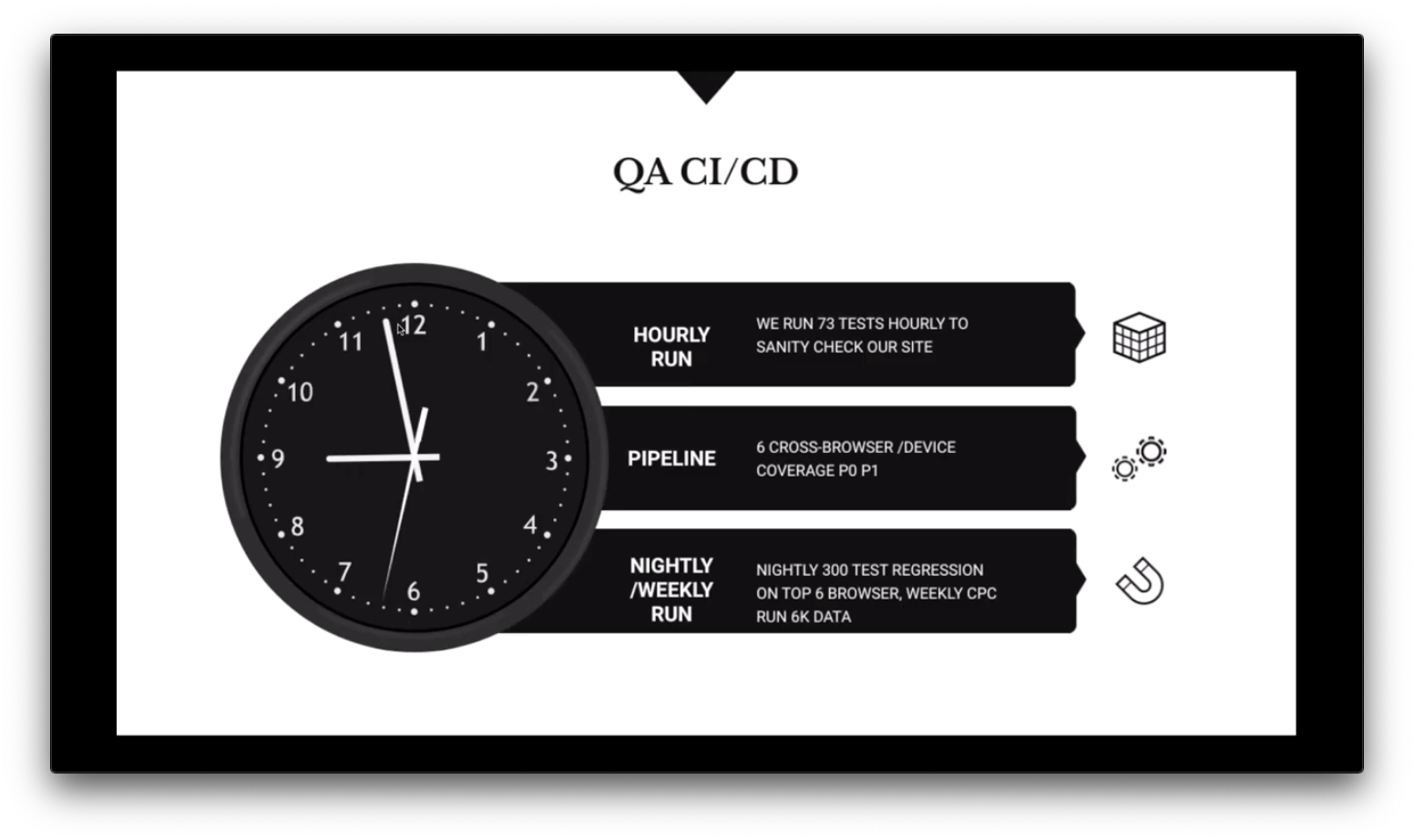

Finally, Priyanka and her team had to set expectations for testing. How often would they test? How often would they build?

QA for CI/CD means that test and build become asynchronous. Regardless of the build state:

- Hourly: QA runs 73 tests against the latest build to sanity check the site.

- On Build: Any new build runs 6 cross-browser tests and makes sure all critical business paths get covered.

- Nightly: 300 regression tests run on top of the other tests.

Some of these were starting points, but most got refined over time.

Priyanka’s GoodRx Quality Timeline

Next, Priyanka talked about how her team grew from the time she joined until now.

She started in June 2018. At that point, GoodRx had one QA engineer.

- In her first quarter, she added a QA Manager, QA Analyst, and a Senior SDET. They added offshore reprocessing to support releases.

- By October 2018 they had fully automated P0/P1 tests. Her team had added Spinnaker pipeline integration. They were running cross-browser testing with real mobile device tests.

- By December 2018 she added two more QA Analysts and 1 more SDET. Her team’s tests fully covered regression and edge cases.

- And, she pressed on. In early 2019, they had built automation-driven releases. They had added Auth0 support – her team was hyper-productive.

- Then, she discovered her team had started to burn out. Two of her engineers quit. This was an eye-opening time for Priyanka. Her lessons about burnout came from this period. She learned how to manage her team through this difficult period.

By August 2019 she had the team back on an even keel and had hired three QA engineers and one more SDET.

And, in November 2019 they achieved 100% mobile app automation support.

GoodRx Framework for High-Performance Testing

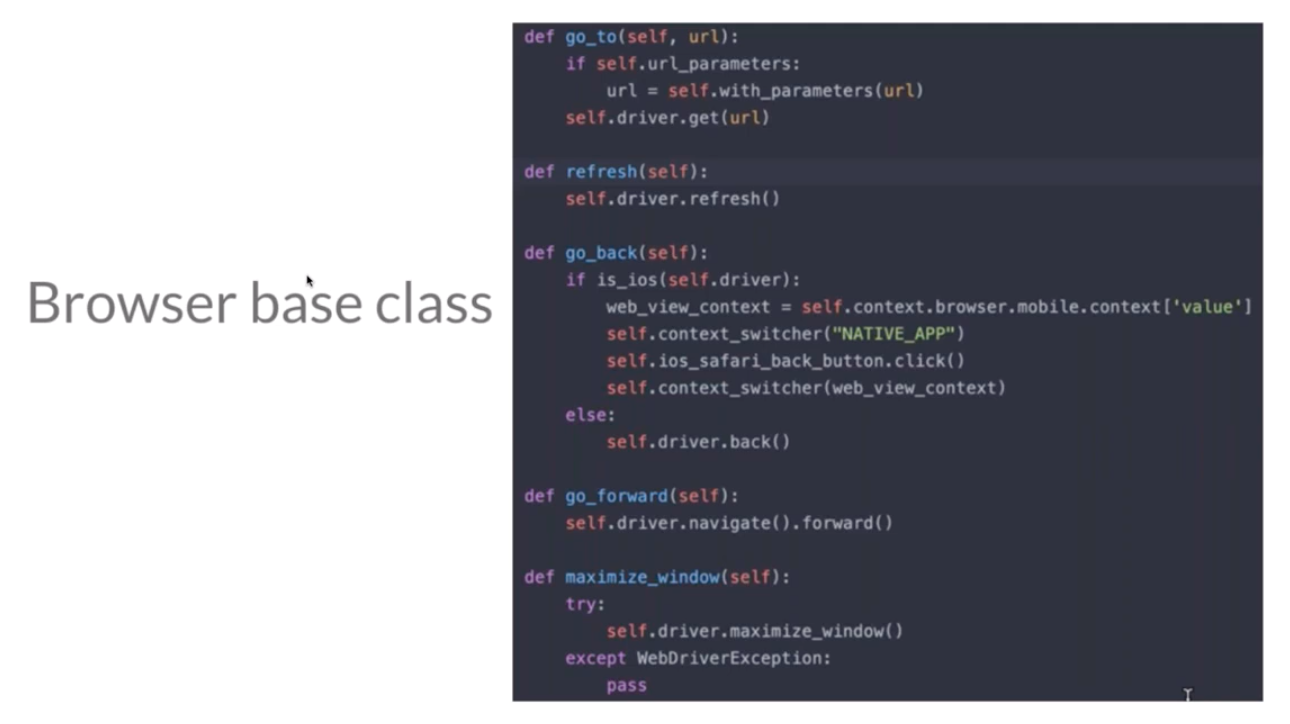

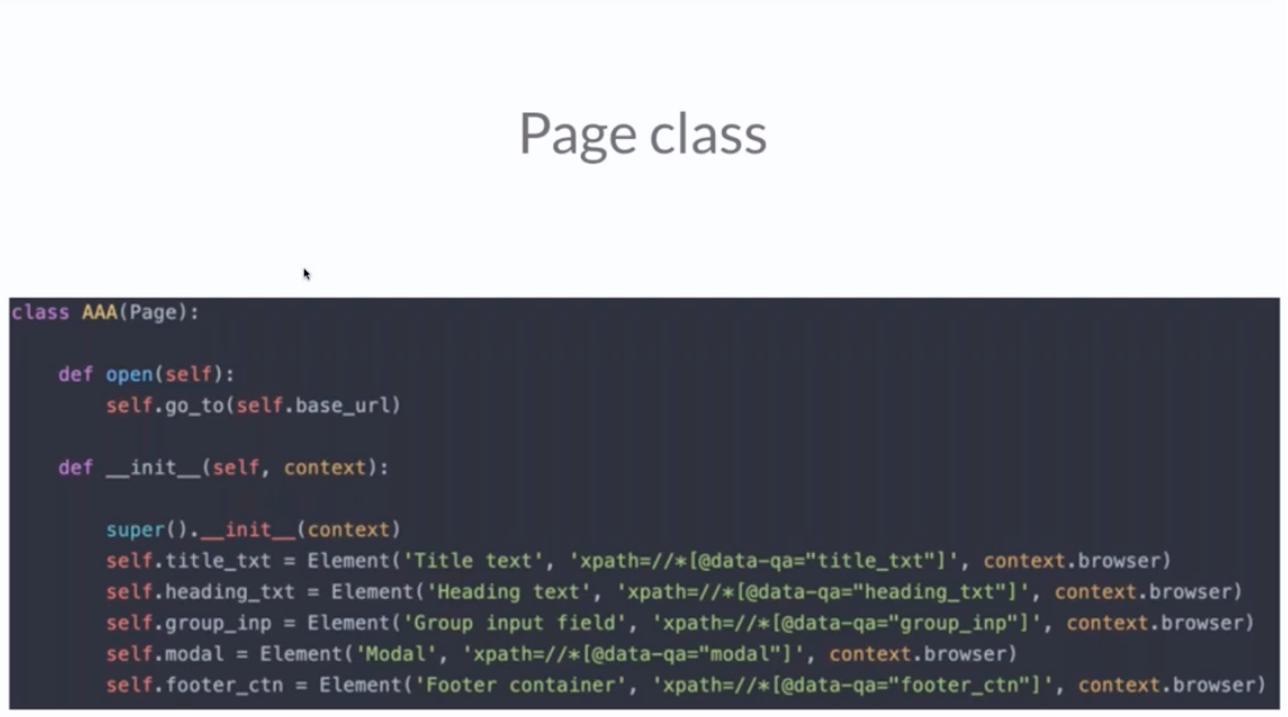

Finally, Priyanka gave a peek into the GoodRx framework, which helps her team build and maintain test automation.

The browser base class provides access for test automation. Using the browser base class eliminates the need to use Selenium embed click.

The page class simplifies the web element location. The page class structure assigns a unique XPath to each web element. Automation benefits by having clean XPath elements for automation purposes.

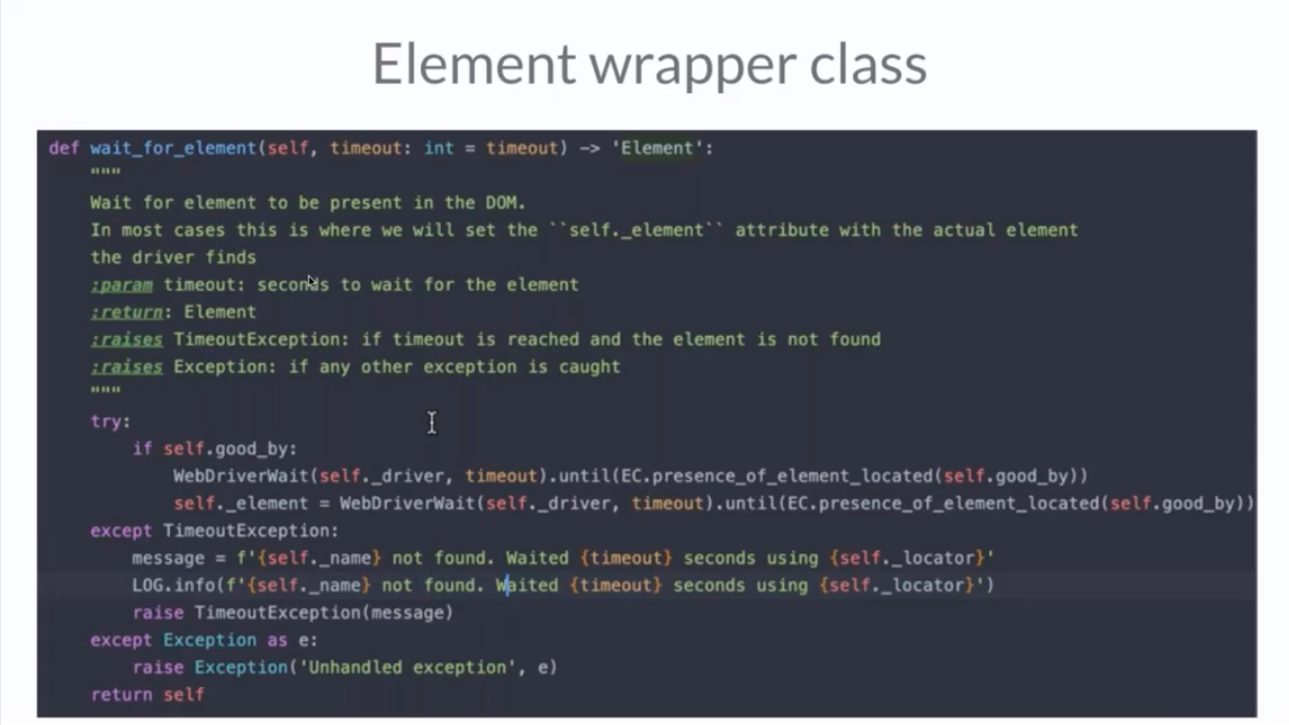

The element wrapper class allows for behaviors like lazy loading. Instead of having to program exceptions into the test code, the element wrapper class standardizes interaction between the browser under test and the test infrastructure.
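The page class and element wrapper ideas can be sketched in a few lines. This is not GoodRx's framework code: the class names, XPath values, and timeouts are hypothetical, and the driver is any object with a `find_element(by, value)` method. The sketch assumes the driver raises `LookupError` when an element is missing; with real Selenium you would catch `selenium.common.exceptions.NoSuchElementException` instead.

```python
import time
from typing import Any, Protocol


class Driver(Protocol):
    """Minimal slice of a WebDriver-like object used by this sketch."""

    def find_element(self, by: str, value: str) -> Any: ...


class LazyElement:
    """Looks the element up only when it is used, retrying until a timeout."""

    def __init__(self, driver: Driver, xpath: str, timeout: float = 5.0,
                 poll: float = 0.1) -> None:
        self._driver = driver
        self._xpath = xpath
        self._timeout = timeout
        self._poll = poll

    def _resolve(self) -> Any:
        deadline = time.monotonic() + self._timeout
        while True:
            try:
                return self._driver.find_element("xpath", self._xpath)
            except LookupError:
                # Assumption: the driver signals "not found" with LookupError.
                if time.monotonic() >= deadline:
                    raise
                time.sleep(self._poll)

    def click(self) -> None:
        self._resolve().click()

    def text(self) -> str:
        return self._resolve().text


class DrugPage:
    """Hypothetical page class: one unique XPath per element, kept in one place."""

    SEARCH_BOX = "//input[@id='drug-search']"
    PRICE_LIST = "//ul[@id='price-list']"

    def __init__(self, driver: Driver) -> None:
        self.search_box = LazyElement(driver, self.SEARCH_BOX)
        self.price_list = LazyElement(driver, self.PRICE_LIST)
```

A test then calls `DrugPage(driver).search_box.click()` and never handles a slow-loading element itself. The retry logic lives in the wrapper, and a changed locator is fixed in one line of the page class.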

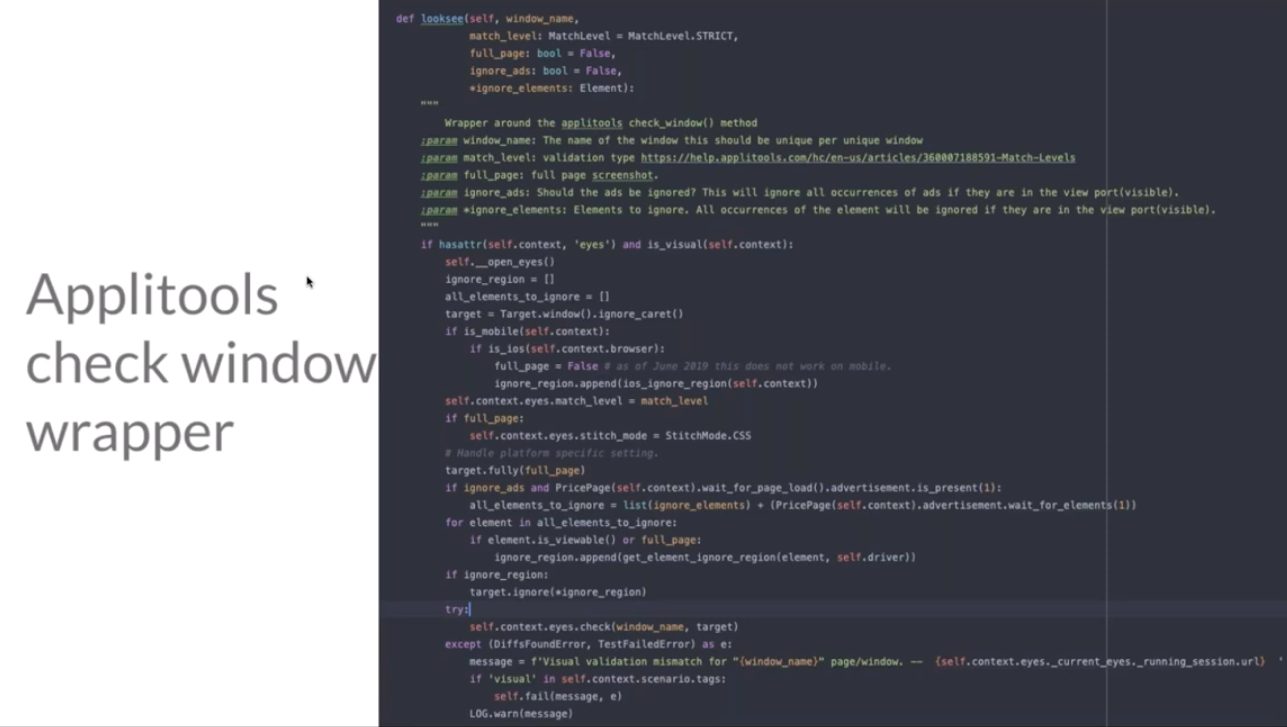

Finally, for every third-party application or tool that integrates using an SDK, like Applitools, GoodRx deploys an SDK wrapper. As one of her SDET team figured, the wrapper ensures that an SDK change from a third party cannot mess up your test behavior. Using a wrapper is a good practice for handling situations when the service you use encounters something unexpected.
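The wrapper idea can be sketched as a thin adapter that is the only code allowed to touch the vendor SDK. Everything here is hypothetical: `VisualChecker`, `VisualCheckError`, and the two stand-in SDK classes are invented for illustration and do not reflect the Applitools API or GoodRx's actual wrapper.

```python
from typing import Callable


class VisualCheckError(Exception):
    """Single error type the test suite sees, whatever the SDK raises."""


class VisualChecker:
    """Hypothetical wrapper: tests call check_page(); only this class knows the SDK."""

    def __init__(self, sdk_check: Callable[[str], bool]) -> None:
        # `sdk_check` adapts whichever SDK call performs the comparison.
        self._sdk_check = sdk_check

    def check_page(self, page_name: str) -> bool:
        try:
            return bool(self._sdk_check(page_name))
        except Exception as exc:  # translate any SDK failure into one type
            raise VisualCheckError(f"visual check failed for {page_name!r}") from exc


# Two stand-in SDK versions with different method names (both invented here).
class VendorSdkV1:
    def check_window(self, tag: str) -> bool:
        return True


class VendorSdkV2:
    def check(self, name: str, *, full_page: bool = True) -> bool:
        return True


checker_v1 = VisualChecker(VendorSdkV1().check_window)
checker_v2 = VisualChecker(lambda name: VendorSdkV2().check(name, full_page=True))
```

When the vendor renames a method or changes a signature, only the line that builds the checker changes. Every test keeps calling `check_page` and keeps catching the same `VisualCheckError`.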

The framework results in a more stable test infrastructure that can rapidly change to meet the growth and change demands of GoodRx.

Conclusions

Hyper-growth companies put demands on their quality engineers to achieve quickly. Test speed matters, but it cannot be achieved consistently without investment. Just as Priyanka started with the story of the Three Little Pigs, she made clear that success requires investment in automation, people, AI/ML, and framework.

